M1: An Architecture for a Living Android's Mind

by Patrick M. Rael (pmrael@aol.com)

Abstract: The goal of the M1-Project is to create a physical and virtual Android Robot which can be programmed to perform creative behaviors somewhat similar to those of people. The system will have a physical presence. The system must be able to adapt to new situations. It must be able to re-program itself. The Android's Mind must be designed such that, given enough time and education, it can become an expert in fields of study and earn advanced degrees. A balance must be struck between too little initial programming, resulting in decades or centuries of learning, and too much initial programming, resulting in too much change being required for new learning to occur.

The M1 Android Robot Mind Architecture as outlined below is a work in progress. Ten areas have been identified as being significant or helpful in order to program a sentient robot. These ten components will form the foundation of the android's "Mind". It is critical to note that the android's mind is no mere I/O function. The M1 Mind will not be a simplistic "if A then 1, elseif B then 2, ...". Nor will M1 be a simplistic word pattern-matcher. Rather, M1 will consist of a physical presence in a defined dimensional universe and will be heavily oriented toward learning about itself, others, the world and the universe.

Preface

Introduction

1. Spatial-Extents

2. Species-Recognition

3. Survival

4. Temporal-Extents

5. Analogy-Processor

6. Mind-Casts

7. Brain Store

8. Sensory I/O

9. Focus

10. Language

M2

M3

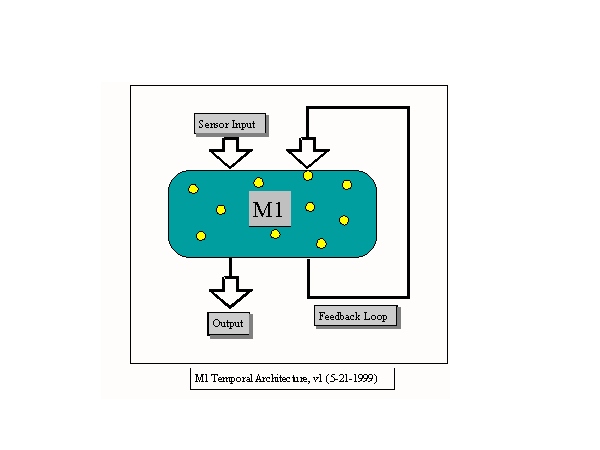

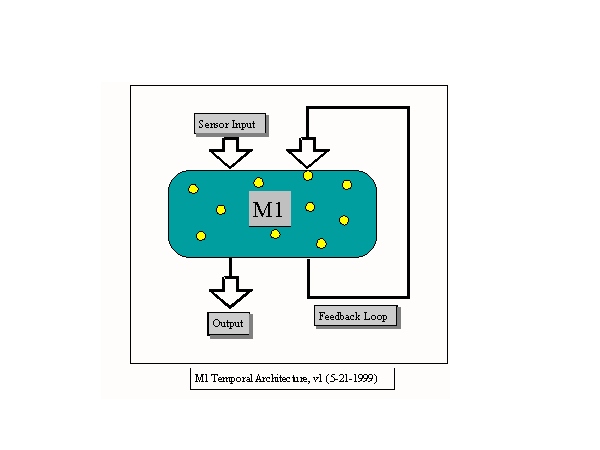

The design of the Temporal Architecture is very simplistic at this point. It will be further refined and detailed in the coming weeks and months. However, there is enough detail even at this early stage to see that the M1 Architecture is no mere I/O function. The Feedback Loop provides a potentially continuous processing mechanism regardless of Sensor Input and Output; i.e., the M1 Mind can be functioning and active regardless of any current input and output.
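As a rough illustration of the Feedback Loop idea above, here is a minimal sketch in Python. It is not the M1 design itself: the class name `FeedbackMind`, the integer `state`, and the update rule are all assumptions made up for this example. The only point it tries to show is that each step feeds the previous state back in, so processing continues whether or not a sensor reading is waiting.

```python
from collections import deque


class FeedbackMind:
    """Toy mind whose processing continues with or without sensor input.

    Everything here is hypothetical; the integer state stands in for
    whatever the real M1 Mind would carry from one moment to the next.
    """

    def __init__(self):
        self.state = 0        # internal state, fed back into every step
        self.inbox = deque()  # pending sensor readings; may stay empty

    def sense(self, reading):
        """Queue a sensor reading for the next step."""
        self.inbox.append(reading)

    def step(self):
        """Advance one tick, using a sensor reading only if one is waiting."""
        reading = self.inbox.popleft() if self.inbox else None
        # Feedback: the previous state is always an input to the next state.
        self.state = self.state + 1 + (reading or 0)
        return self.state


mind = FeedbackMind()
mind.step()      # no input at all, yet the state still advances
mind.sense(10)
mind.step()      # this step also folds in the queued reading
```

A plain I/O function would have nothing to do on the first `step()` call; the feedback path is what keeps this toy mind active.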

1. Mind-Casts

1. Scientific

2. Religious

3. Warrior

4. Political

5. Business

6. Artistic

7. Bios

8. Herd

9. Self

10. Partner

These Mind-Casts represent a high-level overview of the categories of behavior and information processing. I think it's critical to identify and differentiate between these categories of behavior because focusing on only one should prove to be a major complexity reduction when it comes to implementing them. I propose that the Scientific Mind-Cast may be the easiest to implement because there is less randomness to code than in the other Mind-Casts. The Scientific mind is the epitome of objectivity, like a finely tuned machine.

Sometimes when trying to solve a complex system, it helps to have a focused, short-term goal. Otherwise, the complexity may be overwhelming. A good short-term goal which encompasses several of the components of the M1 Mind Architecture is the following:

As a first milestone of the M1 Mind Architecture, I'd like to be able to place the computerized Android Robot head in any room and let the robot identify 8 things with its sensors:

- Ceiling
- Floor
- Walls
- Doors/doorways
- Windows
- Tables
- Chairs
- People

While the Robot is doing this, it will be building a fully 3-D representation of what it perceives around it. This will be called the Robot's Mind's Eye. This 3-D representation is to be visible on the computer screen so that anyone can see what the Robot perceives.

For example, if the Robot thinks a table is in the room, then it will create a 3-D table object in its Mind's Eye. If the Robot thinks a person is in the room, it will create an Avatar to represent that person. Note that the Robot can be wrong. It may think a person is in the room when only a life-size picture of a person is present.

The Robot's Mind's Eye operates under a system of Perceptual Assumptions. It is not necessary for the Robot's Mind's Eye to be 100% accurate; only that the Robot act on its perceived reality. The Robot may ask for help in identifying things and people.
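To make the Mind's Eye milestone a little more concrete, here is a small Python sketch of how perceived objects might be recorded as assumptions, not facts. The names `Percept`, `MindsEye`, `confidence`, and `ask_below` are invented for this illustration, and the 0 to 1 confidence scale is an assumption; nothing here is a committed design.

```python
from dataclasses import dataclass, field

# The eight kinds of things named in the first milestone.
KNOWN_KINDS = {
    "ceiling", "floor", "wall", "door", "window", "table", "chair", "person",
}


@dataclass
class Percept:
    """One object the Robot believes is present (it may be wrong)."""
    kind: str
    position: tuple     # (x, y, z) in room coordinates; units are assumed
    confidence: float   # hypothetical belief strength between 0 and 1


@dataclass
class MindsEye:
    """Holds the Robot's perceived reality, not ground truth."""
    objects: list = field(default_factory=list)
    ask_below: float = 0.5  # assumed threshold for asking a human for help

    def perceive(self, kind, position, confidence):
        """Add a perceived object to the 3-D scene."""
        if kind not in KNOWN_KINDS:
            raise ValueError(f"unknown kind: {kind}")
        percept = Percept(kind, position, confidence)
        self.objects.append(percept)
        return percept

    def needs_help(self):
        """Percepts the Robot is unsure about and may ask a person to confirm."""
        return [p for p in self.objects if p.confidence < self.ask_below]


eye = MindsEye()
eye.perceive("table", (1.0, 0.0, 2.0), 0.9)
eye.perceive("person", (0.0, 0.0, 3.0), 0.4)  # might be a life-size picture
unsure = eye.needs_help()                      # contains only the "person"
```

The Robot would still act on everything in `eye.objects`; the low-confidence entries are simply the ones it might ask about.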

Discussion:

I don't think it's absolutely necessary for there to be a physical component for life. I can imagine non-physical forces such as gravity, magnetism, etc., which I wouldn't want to rule out as being potential domains of life forms. However, at the moment it would be exceedingly difficult to build such a non-physical life form with current technology. Therefore, a physical life form will be attempted.

Discussion:

Most of the life forms we can currently study have the ability to recognize others of their own species. This enables reproduction. While the android robot we're building is not going to have its own reproductive capabilities, it will nonetheless be useful for it to recognize its own species and its creator species.

Discussion:

The Android robot will need an energy source. It's not going to be much use if it has a constant umbilical cord connected into the wall. It will be sufficient for the robot to periodically replenish its power sources. Three times daily is acceptable. We can call that a Power-Breakfast, Power-Lunch, and Power-Dinner. Snacks are OK too.

The robot isn't going to be of much practical value if it readily disassembles itself. An aversion to (fear of) dismemberment will have to be encoded.

Discussion:

Many early prototypes of intelligence were in the form of programs which pretended to carry on a discussion with people. The person types input into the program and the discussion ensues. Most of these programs suffer from the Black-Box-Syndrome: the thinking is that one MUST model intelligence as if it could live in a black box. A very brilliant person invented this black-box system. Unfortunately, this person didn't himself provide an implementation using this system.

Many of these programs lack the Temporal Extents component: they will wait indefinitely for input. The ones which won't wait indefinitely usually have a simple chronometer-based timeout. I don't think M1 should have a simple chronometer-based temporal system. I think M1's Temporal Extents system is at least one level of complexity beyond a simple chronometer.

Discussion:

Analogies are useful for understanding something new in terms of its relation to something already known.

1. Mind-Cast Categories with a sampling of some properties.

   1. Science* - Facts, proof, hypothesis, theory, objectivity, rigor, mathematical, logic, deduction, induction, axioms, education, cause-effect.
   2. Religion - Belief, faith, salvation, mysticism, happiness, perception, appearance, good/evil, super-coordinator, ways to live, subjective codes of conduct, multi/after-lives, balance with nature, angels, spirits, ghosts, super-natural, purposeful, creationism, fear of eternal fire/plasma and/or punishment.
   3. Warrior - Military, battle, superiority, conquer, fight, survival, defend, intimidation, force, fight or flight.
   4. Political - Authority, popularity, control of others, appearance, polls, election, coup, public service/control.
   5. Business - Profit, wealth, material, money, greed, transactions, marketing, commerce, labor.
   6. Art - Subjectivity, appearance, creativity, originality, imprecision, entertainment, authoring.
   7. Bios - Body, health, sports, pleasure, pain, reproduction, appearance of, organic, gut, maintenance.
   8. Herd - Mob, hive, collective, self-less, one-of-many, strength-in-numbers, the flow, what others think and are doing is of supreme importance.
   9. Self - I, me, yo, self-ish, the center of the universe is ME.
   10. Partner - Significant other, extended self, family.

2. Mind-Cast System Analysis Methodologies (SAM) - Certain MCs are associated with methodologies for analyzing systems. For example, in Science the scientific method prevails in analyzing systems. Certain SAMs below are generic and may apply to all MCs. The information below is preliminary and subject to revision.

   1. Give Things A Name (GTAN) - Give things a name or find the name of things.
   2. Principle of Variables (PV) - Find the variable (that which can vary) properties in things. Consider the system's state when the values of the variables are changed.
   3. Hierarchies
      1. Generalize-Categorize (GC) - The more general category.
      2. Specialize-Instantiate (SI) - The more specific example.
   4. Domain-Analysis (DA) - A system for analyzing the legal and illegal domains of systems.
   5. Idealism (Id) - A system for decoupling what one wants or what should happen from how to achieve it or how it will come about.
   6. Ways of Choosing (WC) - A system for making choices.
      1. Does the choice have to be correct? Does a wrong choice exist?
      2. Is this a Defining choice? Is any choice okay?
      3. Is there a set or range(s) to choose from?
   7. Cause and Effect modeling.
   8. Logic
   9. Scientific Method
   10. more ...
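The Hierarchies methodology above (Generalize-Categorize and Specialize-Instantiate) lends itself to a short sketch. The following Python is only one possible reading: the `Category` class and its `generalize` and `specialize` methods are hypothetical names chosen for this example, and the furniture data is made up.

```python
class Category:
    """A named thing (GTAN) placed in a hierarchy of categories."""

    def __init__(self, name, parent=None):
        self.name = name        # Give Things A Name
        self.parent = parent    # the more general category, if any
        self.children = []      # the more specific examples
        if parent is not None:
            parent.children.append(self)

    def generalize(self):
        """GC: return the more general category, or None at the root."""
        return self.parent

    def specialize(self):
        """SI: return the more specific examples directly below this one."""
        return list(self.children)


furniture = Category("furniture")
table = Category("table", furniture)
chair = Category("chair", furniture)

more_general = table.generalize()                     # the furniture category
more_specific = [c.name for c in furniture.specialize()]
```

Walking up with `generalize()` and down with `specialize()` is about the simplest structure that supports both directions; a real system would presumably allow multiple parents.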

Discussion:

In any Android Robotic project, eventually the point is reached where behavioral modeling is addressed. Unless all behaviors are fair game [not necessarily a bad choice], the domain of human behaviors is usually identified next. Then a categorization of the large number of behaviors may ensue, from which it can be observed that not all of the domain of behaviors are worth modeling.

Discussion:

It would be very useful if it were possible to debug the robot's mind while it's running. Ideally, I'd like to implement the Brain-Store as a simple Unix File System. This would allow the use of the advanced Shell tools. Also, the idea of tar'ing the file system to tape is appealing. I'm going to work hard to see this through.
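Here is a minimal sketch of what a file-system Brain-Store could look like, using only the Python standard library. The layout (one text file per concept, with `/` in the concept name mapping to subdirectories) and the function names `remember`, `recall`, and `backup` are assumptions for illustration only. The archive is written to a file here, not to tape.

```python
import tarfile
import tempfile
from pathlib import Path


def remember(store, concept, text):
    """Write one concept to its own file; '/' in the name makes subdirectories."""
    path = store / f"{concept}.txt"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(text, encoding="utf-8")
    return path


def recall(store, concept):
    """Read a concept back; ordinary shell tools could read the same file."""
    return (store / f"{concept}.txt").read_text(encoding="utf-8")


def backup(store, archive):
    """Archive the whole Brain-Store, much like running tar on the directory."""
    with tarfile.open(str(archive), "w") as tar:
        tar.add(str(store), arcname=store.name)
    return archive


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "brain"
    remember(store, "room/table", "four legs, flat top")
    recalled = recall(store, "room/table")
    archive = backup(store, Path(tmp) / "brain.tar")
    with tarfile.open(str(archive)) as tar:
        names = tar.getnames()  # paths stored in the archive
```

Because every memory is an ordinary file, tools like `grep`, `ls`, and `diff` could inspect the store while the mind is running, which is the debugging benefit described above.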

Discussion:

Motor control is implied. In the beginning, video cameras, microphones, speakers, buttons for skin, and gyroscopes will have to suffice for Sensory I/O.

There is a common misconception about Skin I'll clear up now. Many people assume incorrectly that a skinned robot is more advanced than a metal- or plastic-framed robot. This is incorrectly inferred from the Human Superiority Complex. There are many reasons why Skin isn't superior to durable metal: durability, for one.

Discussion:

This module is going to be the tough one. Nine other components have been removed from it and all that remains is the root core of how I'm going to define sentience. This elusive component is arguably the holy grail of Artificial Intelligence. Good luck Pat! Thanks, I'll need it.

Discussion:

I'm convinced that many people think in a stream of networked concepts. Mixed into that are language idiosyncrasies. So I think it's not just language, and not just concepts, but a mixture of the two. However, my first cut at this will be a highly modular pair of Concepts and Language. It's not clear to me how to proceed any other way while still providing a system powerful enough to model any language. Most systems lock in to a specific language very early. The system being designed for M1 will not lock in to any particular language, ever!
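One way to picture the modular pair of Concepts and Language is to keep concepts language-neutral and attach each language as a separate, swappable lexicon. The Python below is a sketch under that assumption; the concept IDs, the two tiny lexicons, and the function names are all invented for this example.

```python
# Hypothetical language-neutral concept identifiers.
CONCEPTS = {"TABLE", "PERSON"}

# Each language is a separate module mapping its words onto concepts.
LEXICONS = {
    "en": {"table": "TABLE", "person": "PERSON"},
    "es": {"mesa": "TABLE", "persona": "PERSON"},
}


def to_concept(word, language):
    """Map a word in one language to its concept, or None if unknown."""
    return LEXICONS[language].get(word.lower())


def to_word(concept, language):
    """Map a concept to a word in the given language, or None if missing."""
    for word, mapped in LEXICONS[language].items():
        if mapped == concept:
            return word
    return None


def translate(word, source, target):
    """Go word -> concept -> word, so no language is ever the pivot."""
    concept = to_concept(word, source)
    return to_word(concept, target) if concept else None


english = translate("mesa", "es", "en")   # "table"
unknown = translate("dog", "en", "es")    # None: no such word in the lexicon
```

Adding a language means adding one more lexicon; the concept layer is untouched, which is the sense in which the system never locks in to a language.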

1. Honor

2. Loyalty

3. Modesty

4. Stubborn

5. Dignity

6. Pride

...

1. A robot must not harm a human being, nor through inaction allow a human to come to harm.

2. A robot must do what it's told by a human being, except where that would conflict with law 1.

3. A robot must protect itself, except where that would conflict with laws 1 and 2.
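The three laws above form a strict priority order, which can be sketched as a lexicographic comparison. This Python is only an illustration under heavy assumptions: it presumes some other part of the system has already judged each candidate action and filled in the hypothetical flags `harms_human`, `disobeys_order`, and `harms_self`. Making those judgments is the hard part and is not attempted here.

```python
def law_key(action):
    """Sort key in which earlier laws dominate later ones.

    Python compares tuples left to right, and False sorts before True,
    so a law 1 violation always outweighs any law 2 or law 3 violation.
    """
    return (
        action["harms_human"],     # law 1, including harm allowed by inaction
        action["disobeys_order"],  # law 2
        action["harms_self"],      # law 3
    )


def choose(candidates):
    """Pick the candidate action that best satisfies the laws in order."""
    return min(candidates, key=law_key)


candidates = [
    {"name": "obey harmful order", "harms_human": True,
     "disobeys_order": False, "harms_self": False},
    {"name": "refuse order", "harms_human": False,
     "disobeys_order": True, "harms_self": False},
]
chosen = choose(candidates)  # refusing wins: law 1 outranks law 2
```

Doing nothing should be listed as a candidate too, with `harms_human` set when inaction would let a human come to harm.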